I upgraded my home lab from VMware vSphere 5.0 U1 to vSphere 5.1 today, using the GA bits that became available late yesterday, 10-September-2012.

vCenter Server

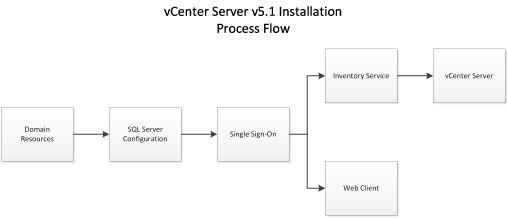

As with all vSphere upgrades, the first task was to upgrade the vCenter Server instance. This is also the first place you'll see changes from the install process that has been familiar since v4.

Figure: Install Screen for VMware vCenter Server

The first thing you notice is that two other options are prerequisites to installing vCenter Server: vCenter Single Sign On (SSO) and Inventory Service.

VMware does you the favor of offering an option to "orchestrate" the install of the two items prior to installing vCenter Server, but in doing so, it also keeps you from accessing all of the installation options (like HA-enabled SSO) available in the individual prerequisite installs.

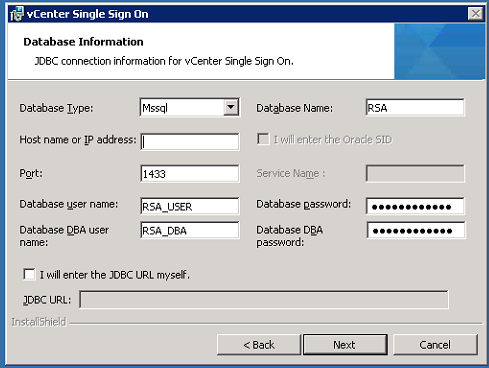

The next hiccup that I encountered was the requirement for the SSO database. Yes, it does support Microsoft SQL Server, and yes, the installer can set up an instance of SQL Server Express for you. But if you'd like to use the database instance that's supporting the current vCenter database, you'll have two additional steps to perform, outside of the VMware installer:

1) Run the database creation script (after editing it to use the correct data and logfile locations for the instance)

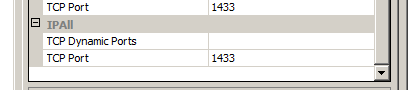

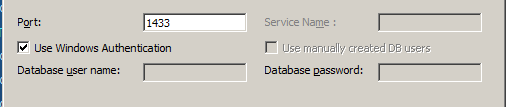

2) Reset the instance to use a static port.

The documentation lists a static SQL Server port as a prerequisite for installing the SSO service. But why? Because SSO relies on JDBC to connect to the database, and the JDBC connection it sets up doesn't understand named instances, dynamic ports, or the SQL Server Browser service and its SQL Server Resolution Protocol.

Figure: Note the lack of options for named instances.

If, like many folks, you used the default install of SQL Express as your database engine, you have the annoyance of needing to stop what you're doing and switch your instance (VIM_SQLEXP, if using the default) to an unused, static port. For that setting to take effect, you must restart the SQL Server instance, which also means your vCenter services will need to be restarted.

Again, this is a documented requirement in the installation guide, which is yet another reason to read through it before jumping in...
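To make the static-port requirement a little more concrete, here is a minimal Python sketch. It builds the kind of host-and-port JDBC URL that a fixed-port connection relies on, and checks whether anything is listening on that port once the instance has been restarted. The function names and any host, port, or database values you pass in are placeholders of mine, not values taken from the installer or the guide.

```python
import socket


def jdbc_url(host: str, port: int, database: str) -> str:
    """Build a SQL Server JDBC URL that targets a fixed host and port."""
    return f"jdbc:sqlserver://{host}:{port};databaseName={database}"


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution errors.
        return False
```

After switching the instance to a static port and restarting it, calling `port_is_open` with your SQL Server's host name and the port you chose is a quick sanity check before you start the SSO installer.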

Once you have SSO installed, the Inventory Service is recognized as an upgrade to the existing service, as is the vCenter Server itself. Nothing really new or unique in adding these services, with the exception of providing the master password you set during the SSO installation.

Then the ginormous change: you've probably been installing the Web Client out of habit, but now you really need to do so if you'd like to take advantage of any new vSphere 5.1 features that are exposed to the user/administrator (like shared-nothing vMotion).

But don't stop there! Make sure you install the vSphere Client as well. I don't know which plug-ins for the Client are supposed to be compatible with the Web Client, but the ones I use the most (Update Manager and VMware Data Recovery) are still local client only.

That's right: Update Manager 5.1—which is also one of the best ways to install ESXi upgrades for small environments that aren't using AutoDeploy or other advanced provisioning features—can only be managed and operated from the "fat" client.

Finally, one positive note for this upgrade: I didn't have to change out any of my 5.0 license keys for vCenter Server. As soon as vCenter was running, I jumped into the license admin page and saw that my existing license was consumed for the upgraded server, and no new "5.1" nodes in eval mode were present.

ESXi

Once vCenter is upgraded and running smoothly, the next step is to upgrade your hosts. Again, for small environments (which is essentially 100% of those I come across), Update Manager is the way to go. The 5.1 ISO from VMware is all you need to create an upgrade baseline, and serially remediating hosts is the safest way to roll out your upgrades.
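The one-host-at-a-time idea can be sketched in a few lines of Python. This is not Update Manager's API; `remediate` is a hypothetical callable standing in for whatever actually upgrades a host, and stopping at the first failure is my own assumption about what makes serial remediation the safer choice.

```python
from typing import Callable, Iterable, List


def remediate_serially(
    hosts: Iterable[str],
    remediate: Callable[[str], bool],
) -> List[str]:
    """Remediate hosts one at a time, stopping at the first failure.

    `remediate` is a hypothetical callable that upgrades a single host and
    returns True on success. Returns the hosts that were upgraded.
    """
    upgraded: List[str] = []
    for host in hosts:
        if not remediate(host):
            # Stop here so the remaining hosts stay on the old version.
            break
        upgraded.append(host)
    return upgraded


# Stand-in for a real remediation call: pretend esx02 fails.
def fake_remediate(host: str) -> bool:
    return host != "esx02"


print(remediate_serially(["esx01", "esx02", "esx03"], fake_remediate))
# ['esx01']
```

With only one host in flight at any time, a bad upgrade costs you a single host while the rest keep running your VMs on the old version.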

Like the vCenter Server upgrade, those 5.0 license keys are immediately usable in the new host, but with an important distinction: as near as I can tell, those old keys are still "aware" of their "vTax" limitations. It doesn't show in the fat client, but the web client clearly indicates a "Second Usage" and "Second Capacity" with "GB vRAM" as the units.

Figure: vRAM limits still visible in old license key: 6 x 96 = 576.
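The arithmetic in that caption is easy to reproduce. In the sketch below I'm reading the 6 as the number of licenses on the key and the 96 as the GB of vRAM each one entitles; that reading, the function names, and the usage figure are my assumptions, not something the license page spells out.

```python
def pooled_vram_gb(license_count: int, vram_per_license_gb: int) -> int:
    """Total pooled vRAM capacity, in GB, for a set of identical licenses."""
    return license_count * vram_per_license_gb


def vram_headroom_gb(capacity_gb: int, used_gb: int) -> int:
    """Remaining vRAM before the cap is reached (negative means over the cap)."""
    return capacity_gb - used_gb


capacity = pooled_vram_gb(license_count=6, vram_per_license_gb=96)
print(capacity)  # 576
print(vram_headroom_gb(capacity, used_gb=64))  # 512; the 64 GB usage is made up
```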

I can only assume that converting old vSphere 5 to vCloud Suite keys will replace that "Second Capacity" value with "Unlimited" or "N/A"; if you've got a big environment or host Monster VMs, you'll want to get those new keys as soon as possible to eliminate the capacity cap.

The upgrade itself was pretty painless for me. I run my home lab on a pair of Dell PE2950 III hosts, and there weren't any odd/weird/special VIBs or drivers with which to contend.

Update: vCloud Suite keys did NOT eliminate the "Second Capacity" columns; vRAM is still being counted, and the old v5.0 entitlement measures are being displayed.

Virtual Machines

The last thing you get to upgrade is your VMs. As with any upgrade to vSphere (even some updates), VMware Tools becomes outdated, so you'll need to work in some downtime to upgrade to the latest version. Rumors to the contrary, upgrading Tools will require a reboot in Windows guests, at least to get the old version of Tools out of the way.

vSphere 5.1 also comes with a new VM Hardware spec (v9) which you can optionally upgrade to as well. Like previous vHardware upgrades, the subject VM will need to be powered off. Luckily, VMware has added a small bit of automation to this process, allowing you to schedule the upgrade for the next time the guest is power-cycled or cleanly shut down.